The technology sector flourished in 2025, with AI taking centre stage. Massive investments in data centre capacity by the likes of OpenAI, Meta Platforms and Google fed into a boom that some have predicted is a bubble waiting to burst.

Perhaps the main beneficiary of AI’s rise in 2025 was chip maker Nvidia, whose market capitalisation breached the US$4-trillion mark in July – the first company in history to do so. Then, in October, its valuation peaked at just over $5-trillion – another first.

AI will undoubtedly continue to be an influential force in 2026, but other technologies, such as silicon-carbon batteries, 2nm chips and neuromorphic computers, may also have considerable impact on the sector and the world at large. Here, then, are TechCentral’s top 10 technologies that are likely to have an impact in 2026.

1. Silicon-carbon and silicon-carbide battery technology

Using silicon-carbon anodes instead of graphite allows for larger battery capacities in smaller form factors. Meanwhile, the use of silicon carbide as a semiconductor makes for chargers that are more efficient. This not only makes using gadgets more convenient but promises to reduce the charging time of electric vehicles. In 2026, silicon-carbon and silicon-carbide technology is likely to expand into electric vehicles, laptops, cellphones and a host of other gadgets.

2. Agent-to-agent economics

Initially developed by Google and now managed as an open-source project by the Linux Foundation, the Agent2Agent (A2A) protocol is an emerging standard for inter-agent communication. As agents gain greater autonomy, many will be given the ability to make commercial decisions without any human intervention. Picture an AI-powered smart-home system that can use its own telemetry data to assess the accuracy of monthly utility bills and even send queries to the municipality in case of disputes. Service providers can in turn have their own AI agents handling those queries, allowing them to settle disputes autonomously.
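To make the smart-home scenario concrete, here is a minimal Python sketch of the bill-checking step. It is an illustration only: the `UtilityBill` type, the `check_bill` function, the 5% tolerance and the shape of the query message are all hypothetical, and none of it is taken from the A2A specification.

```python
from dataclasses import dataclass


@dataclass
class UtilityBill:
    billed_kwh: float       # consumption the provider charged for
    tariff_per_kwh: float   # hypothetical flat tariff, in local currency


def check_bill(daily_kwh_readings, bill, tolerance=0.05):
    """Compare the home's own meter telemetry with the billed consumption.

    Returns None when the bill is within the tolerance, otherwise a
    dictionary that an agent could send to the provider as a query.
    The message format is invented for this sketch.
    """
    metered_kwh = sum(daily_kwh_readings)
    difference_kwh = bill.billed_kwh - metered_kwh
    # Accept the bill if it deviates by no more than `tolerance` of metered usage.
    if abs(difference_kwh) <= tolerance * metered_kwh:
        return None
    return {
        "type": "billing_dispute",
        "metered_kwh": round(metered_kwh, 2),
        "billed_kwh": bill.billed_kwh,
        "disputed_amount": round(difference_kwh * bill.tariff_per_kwh, 2),
    }


readings = [10.0] * 30  # 30 days of 10kWh each, as logged by the home
query = check_bill(readings, UtilityBill(billed_kwh=360.0, tariff_per_kwh=2.5))
```

In a real deployment the returned query would be handed to whatever agent messaging layer is in use, and the provider's agent would answer with its own meter records.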

3. Neuromorphic computing

A major flaw of the current approach to AI computing is that it is resource intensive. At the data centre level, this leads to more electricity being used, more heat generated as a by-product and more water needed for cooling.

Attempts to find more efficient methods of AI computing include a peek into using biological material, instead of silicon chips, as a processing material. Fred Jordan, co-founder and CEO of Swiss biocomputing firm FinalSpark, calls this living material “wetware”. Some firms, like FinalSpark, use human skin cells to build neurons that do the computing.

Expect to hear more noise about this in 2026, though the technology is far from going mainstream.

4. Humanoid robot workers

Vehicle manufacturer Hyundai and Google DeepMind have confirmed their first orders of Boston Dynamics’ Electric Atlas robot. These humanoid workers can lift up to 50kg and have 360-degree mobility in their joints, allowing them to perform repetitive tasks in warehouse and factory-like environments.

BMW, meanwhile, is moving its humanoid plant worker pilot, which it ran in 2025, into production. BMW’s robots watch human workers performing tasks and then learn by copying them. The Figure 02, tested at BMW’s Spartanburg plant, moved 90 000 metal sheets in 2025, according to the company.

5. 2nm chips

In 2026, the world’s foundries, such as those owned and operated by Taiwan’s TSMC and Korea’s Samsung Electronics, are moving their production to more advanced 2-nanometre manufacturing nodes. The move promises chips that deliver 10-15% more performance than the previous generation of 3nm chips at the same power level. Alternatively, users may experience a 25-30% reduction in power consumption for the same performance, according to TSMC.
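The two figures describe different trade-offs, and a little arithmetic shows how they compare. The Python sketch below converts each into a performance-per-watt ratio relative to 3nm. The function names and the 100W example chip are assumptions made for illustration; only the percentage ranges come from the TSMC figures quoted above.

```python
def perf_per_watt_from_speedup(speedup_fraction):
    """Same power, more performance: the ratio is simply 1 + speedup."""
    return 1.0 + speedup_fraction


def perf_per_watt_from_power_cut(power_cut_fraction):
    """Same performance, less power: the ratio is 1 / (1 - cut)."""
    return 1.0 / (1.0 - power_cut_fraction)


def power_after_cut(baseline_watts, power_cut_fraction):
    """Power drawn by the newer chip for the same workload."""
    return baseline_watts * (1.0 - power_cut_fraction)


# Hypothetical 100W 3nm chip, used only to make the percentages tangible.
for cut in (0.25, 0.30):
    print(f"{cut:.0%} power cut: {power_after_cut(100.0, cut):.0f}W, "
          f"{perf_per_watt_from_power_cut(cut):.2f}x performance per watt")

for gain in (0.10, 0.15):
    print(f"{gain:.0%} speed-up: "
          f"{perf_per_watt_from_speedup(gain):.2f}x performance per watt")
```

Under these assumptions, running at the same performance is the more efficient option: a 25-30% power cut works out to roughly 1.33x to 1.43x the performance per watt, against 1.10x to 1.15x for the higher-speed option.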

Consumers can probably expect to see these new high-end chips in the iPhone 18 and Nvidia’s Vera Rubin GPUs, scheduled to enter the market in late 2026. AMD is also working on 2nm computer chips, expected either late this year or early in 2027. Moore’s Law isn’t dead yet.

6. Photonic computing

In traditional chips, electrons carry signals by moving through copper and silicon. This generates considerable heat. In data centres, the heat problem scales with the amount of hardware deployed, leading to performance degradation, impaired hardware health and higher maintenance costs. The rise of generative AI and its massive compute demands has only magnified these constraints.

Using light may prove to be far more efficient as it produces far less excess heat, leading to more consistent performance while keeping hardware healthier for longer.

Broadcom released its Tomahawk 6-Davisson network switch in 2025, which can carry more than 102.4Tbit/s of data using photonics.

Nvidia launched its Vera Rubin architecture at the 2026 CES event in Las Vegas. According to Nvidia, Vera Rubin chips will perform 3.5x more efficiently because more of them are clustered together – their performance would degrade due to excess heat if they did not use photonics.

7. Continuous authentication

Even with the advent of multifactor authentication through push notifications on specialised credential management apps, cybercriminals continue to find ways to exploit users and gain access to sensitive information. In 2026, the cybersecurity industry is homing in on ways to continuously assess a user’s authenticity using a mix of physical and digital markers, without them endlessly having to enter their credentials or verify their biometrics.

Jason Lane-Sellers, director of fraud and identity for Europe at LexisNexis Risk Solutions, outlined how some of these tools work in a September 2025 interview with TechCentral. Some markers include device-specific information such as IP addresses and IMEI numbers. More sophisticated is telemetry data such as typing speed, rhythm and gait analysis that can give an application service provider hints as to whether the person using the device is who they say they are.
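As a rough illustration of how such markers might be combined, the Python sketch below checks a session's typing rhythm against a stored baseline and also checks whether the device is known. It is a simplified assumption of how such a check could work, not a description of any LexisNexis product: the function names, the z-score method and the threshold of 3.0 are all hypothetical.

```python
from statistics import mean, stdev


def typing_anomaly_score(baseline_intervals_ms, session_intervals_ms):
    """How many baseline standard deviations the session's average
    inter-keystroke interval sits from the user's usual average."""
    mu = mean(baseline_intervals_ms)
    sigma = max(stdev(baseline_intervals_ms), 1e-9)  # guard against zero spread
    return abs(mean(session_intervals_ms) - mu) / sigma


def assess_session(baseline_intervals_ms, session_intervals_ms,
                   device_id, known_devices, threshold=3.0):
    """Return "allow" to keep the session open silently, or "step_up"
    to ask for credentials or biometrics again."""
    if device_id not in known_devices:
        return "step_up"
    score = typing_anomaly_score(baseline_intervals_ms, session_intervals_ms)
    return "step_up" if score > threshold else "allow"


# Hypothetical enrolled profile: milliseconds between keystrokes.
baseline = [100, 110, 90, 105, 95]
known = {"device-a"}

print(assess_session(baseline, [102, 98, 101], "device-a", known))   # usual rhythm
print(assess_session(baseline, [180, 190, 170], "device-a", known))  # much slower typist
```

A production system would weigh many more signals and retrain the baseline over time, but the principle is the same: the user is challenged only when behaviour drifts from the norm.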

8. Stablecoins

The US’s introduction of landmark stablecoin legislation in July 2025 in the form of the Genius Act has laid the foundation for stablecoin adoption to grow rapidly in 2026. It has helped legitimise their use among institutional investors and the general public.

As a result, the number of stablecoins in circulation has increased dramatically, with the combined market capitalisation of the investment type hitting record highs above $283-billion by the end of 2025.

In 2026, stablecoin adoption may be driven by innovations not visible to the user. An example of this is Visa’s December 2025 launch of a USD Coin settlement layer, meaning that when a customer taps their card to make a payment, the underlying infrastructure uses stablecoins and blockchain technology to facilitate immediate interbank settlement. Other service providers like Stripe use similar technology to allow crypto users to pay for goods and have them settled in fiat currency at merchant outlets.

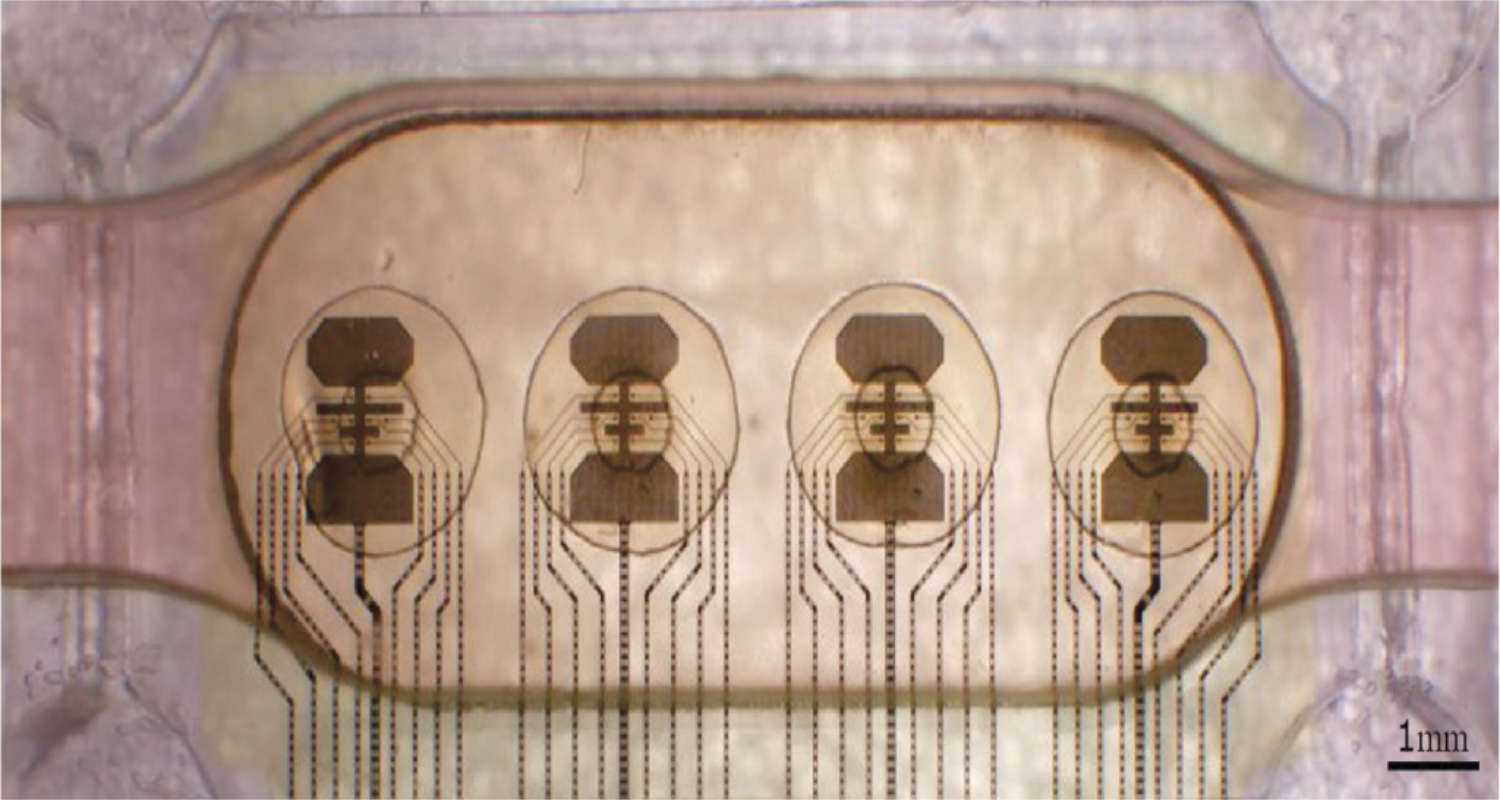

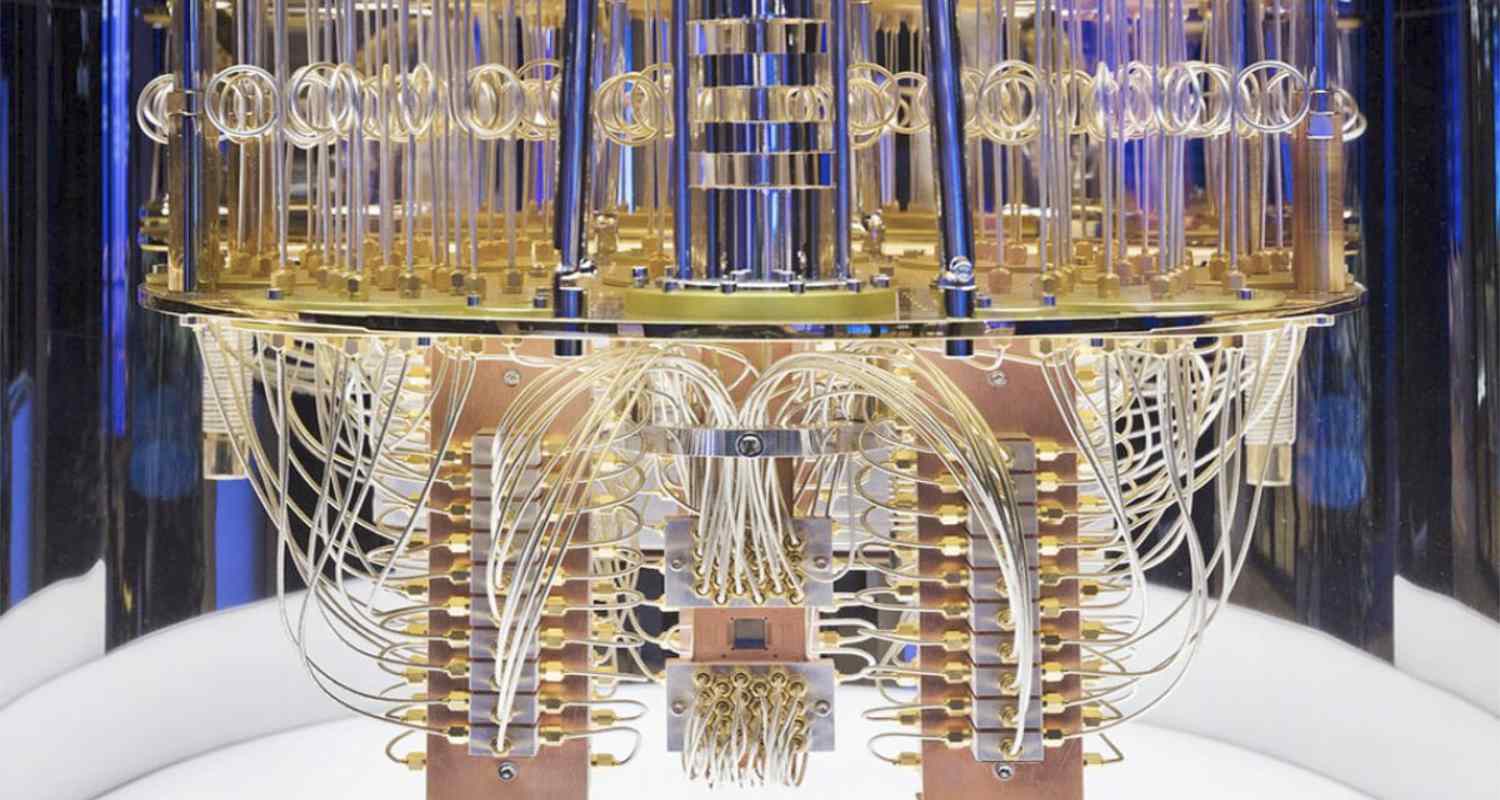

9. Quantum in manufacturing

For all their processing power, quantum computers are not yet ready for prime time. They are notoriously expensive to build, run and maintain. They are sensitive to external environmental factors, are often heavier than a house and need teams of scientists to maintain just the right conditions for them to function.

This has not stopped forward-looking organisations from using early quantum computing to take their manufacturing capabilities to the next level. BMW and Boeing, through cloud access to quantum processors offered by IBM, are using quantum annealing – a specialised methodology for solving combinatorial problems – to manage factory schedules with over 150 000 simultaneous constraints.

What used to take hours of classical computing time now takes just minutes to complete. Quantum computing is also playing a role in quality control, where quantum sensors are being used to detect imperfections in 2nm chips that traditional optical sensors would miss.

10. Domain-specific language models

The rise of generic large language models such as OpenAI’s ChatGPT and Google’s Gemini has come as a boon to productivity, but this hasn’t been without drawbacks. Generic LLMs are prone to hallucination, are costly to train and run, and are often slow in generating responses to complex queries.

In contrast, domain-specific language models consume fewer computing resources, making them cheaper to run and faster, too. They work like a specialist, providing higher levels of utility to subject-matter experts. – © 2026 NewsCentral Media
